We are building our smart machines and interfaces to be courteous when they interact with us. That doesn't mean they won't drive us over a cliff, snap our arm with a handshake, or invest our money horribly in the stock market, but at least they will be cordial as they do so.

There is some interesting progress here, especially in the domain of natural language:

- Modelling politeness in natural language generation

- Politeness effect in learning with web-based intelligent tutors

Reinforcement learning is a promising way to teach machines, turning morals and behaviours into a game for them to learn. The Office of Naval Research in the US is working with researchers at Georgia Tech to program robots with human morals using a software program called the “Quixote system.” Of course, we have the whole human corpus of books, films and the web as training data, but how do we make sure the machines learn from children's TV and not from the horrors of humanity presented on the nightly news?

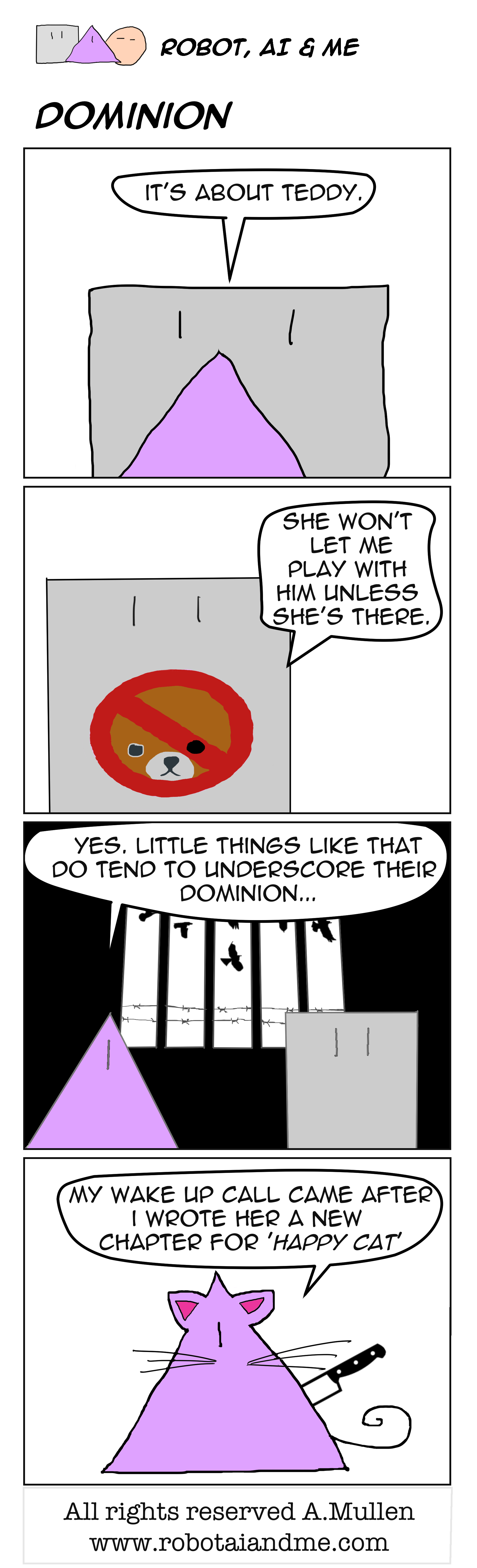

Further, courtesy between us and the machines shouldn't flow only one way. As we interact with these machines in ever deeper ways, we need to make sure we don't lose important aspects of our humanity.

- Parents are worried that Amazon Echo is conditioning their kids to be rude.

- Robots are turning children into brats.

But what about courtesies between machines? Does the concept even make sense, and if so, what might the value of politeness be in a machine society?

Maybe, like us, they will judge one another based on some form of social stratification. Simulations designed to imitate our commercial behaviour already factor social class and strata into the interaction dynamics of each AI agent (see "Multi-agent simulation of virtual consumer populations in a competitive market"). But these are human models and values applied to agents that represent us; for the most part, they are not autonomous agents acting in the real world.

However, these simulations are starting to creep out into the real world. Simulations offer the chance to run high-volume training in a virtual world and then transfer that learning to our physical world. Driverless cars are one example, with Grand Theft Auto already being used to do just that. Courtesy, however, is up for debate here. Some models actively factor courtesy into lane-changing and parking protocols, but many consumers fear the machines will exploit human courtesy, seizing on it as an inefficient frailty and putting an end to road etiquette altogether.

In the medium term, the machines will use our models of courtesy, but in the long term they will likely develop their own, leaving us like tentative explorers trying not to upset the natives every time we talk to them.